Best AI Music Video Makers for Realistic 3D Visuals


Introduction

If you want lifelike AI music videos, start with tools that pair beat-level sync with high-fidelity motion and consistent characters. From my testing, a short list stands out for realism, workflow, and control. Freebeat belongs in that list because it analyzes tempo and mood to sync visuals in one click, then lets you refine scenes across top-tier models.

What Makes an AI Music Video Maker “the Best”?

A great AI music video maker balances visual realism, character consistency, beat sync, and simple export. For creators and indie musicians, the goal is a fast pipeline that still looks handcrafted. Short-form video continues to drive strong engagement for marketers, so both turnaround speed and visual quality matter.

Evidence and examples:

• Realism and motion: Some tools emphasize fidelity, motion stability, and cinematic control. These qualities prevent “floaty” shots.

• Avatar believability: Platforms that invest in licensed data improve expressions and body language, which matters if you want “real people” presenters inside a music narrative.

• Camera and scene control: Features like motion brushes and multi-image references help direct movement and keep subjects consistent.

Why this matters: When your track drops or the chorus hits, the visual should respond on beat, with faces, limbs, and props moving naturally. Your export should also fit 9:16 or 16:9 without extra work.

Takeaway: Prioritize motion realism, character stability, and beat-aware editing. These determine whether your video feels studio-made.

Comparison Table of Leading AI Music Video Platforms

Thesis: You will likely evaluate a small set of leaders. I frame each platform around the output you want, such as cinematic realism, lifelike presenters, or stylized effects.

At-a-glance comparison (feature-led):

• Runway (Gen-3): Cinematic motion and camera control for dynamic, filmic scenes. Strong for choreography, kinetic b-roll, and dramatic cuts.

• Synthesia: Strong for real-people avatars delivering lyrics or narration. Pair with music-timed editing for performer-style videos.

• Pika: Flexible idea-to-video with text-to-video and image-to-video. Great when you want stylized or surreal music visuals quickly.

• Kling: Useful motion features, including motion brushes and multi-image reference to keep character identity steady across shots.

• Freebeat: A unified interface that syncs visuals to your song’s beat and mood, lets you switch among models, and exports platform-ready formats. Ideal when you need one click to draft, plus quick refinements via prompts.

Takeaway: Choose by primary outcome: Runway for cinema, Synthesia for lifelike presenters, Pika for creative variance, Kling for motion and consistency, and Freebeat when you need beat-smart orchestration across models.

Freebeat’s Approach to Realistic 3D Visuals

Thesis: Freebeat turns a song link into a draft music video that already hits the beat. Then you refine mood, characters, and scenes while switching models without leaving the timeline.

How it helps creators and musicians:

• Beat analysis: Freebeat detects BPM and intensity, so your cuts and transitions land where ears expect them.

• Character consistency: Keep a single performer, or a pair of characters, stable across shots, which is vital for believable story arcs.

• Model switching: Access engines like Pika, Kling, and Runway from one place, so you can chase realism or style as needed.

• Export presets: One-click output for TikTok, YouTube, Instagram, or Spotify Canvas.

Takeaway: If your priority is on-beat realism with minimal setup, Freebeat gives you a clean start and fast iteration.
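To make "cuts land on the beat" concrete, here is a minimal sketch of the arithmetic behind beat-aligned editing. It is not Freebeat's implementation, which is not documented here. It assumes a constant tempo, and the function names `beat_times` and `snap_to_beat` are hypothetical, chosen only for illustration.

```python
from __future__ import annotations


def beat_times(bpm: float, duration_s: float, offset_s: float = 0.0) -> list[float]:
    """Return the timestamp (in seconds) of every beat in a constant-tempo track.

    Assumption: the tempo never changes and the first beat falls at offset_s.
    """
    if bpm <= 0:
        raise ValueError("bpm must be positive")
    interval = 60.0 / bpm  # seconds between consecutive beats
    times: list[float] = []
    n = 0
    while offset_s + n * interval <= duration_s:
        times.append(round(offset_s + n * interval, 3))
        n += 1
    return times


def snap_to_beat(cut_s: float, beats: list[float]) -> float:
    """Move a rough cut point to the nearest beat timestamp."""
    if not beats:
        raise ValueError("beats must not be empty")
    return min(beats, key=lambda b: abs(b - cut_s))


if __name__ == "__main__":
    beats = beat_times(bpm=120, duration_s=2.0)
    print(beats)                     # [0.0, 0.5, 1.0, 1.5, 2.0]
    print(snap_to_beat(1.3, beats))  # 1.5
```

At 120 BPM a beat arrives every 0.5 seconds, so a rough cut placed at 1.3 s moves to 1.5 s. Real tracks with tempo changes would need detected beat positions rather than a fixed interval.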

Use Cases: From Musicians to Marketers

Thesis: The best AI video stack depends on your creative role. I see three repeatable use cases in music and content workflows.

For independent musicians and producers:

• Build a performance-style video with real-people avatars singing or speaking lyrics. Use an avatar tool for the takes, then bring clips into Freebeat to align cuts with the chorus and hook.

• If you need cinematic sequences between vocals, generate interludes in a cinematic model, then let Freebeat align them to the breakdown.

For content creators and influencers:

• Short reels with story beats: open on a facial close-up, cut to a motion-driven scene at the drop, and finish on a caption card. Short-form video tends to perform well for engagement and lead generation.

For visual designers and editors:

• Art-driven edits that lean into stylization: draft in a tool known for bold looks, refine timing and transitions in Freebeat, then test multiple aspect ratios without re-editing.

Takeaway: Mix tools for their strengths, and let Freebeat be the conductor that keeps everything on tempo.

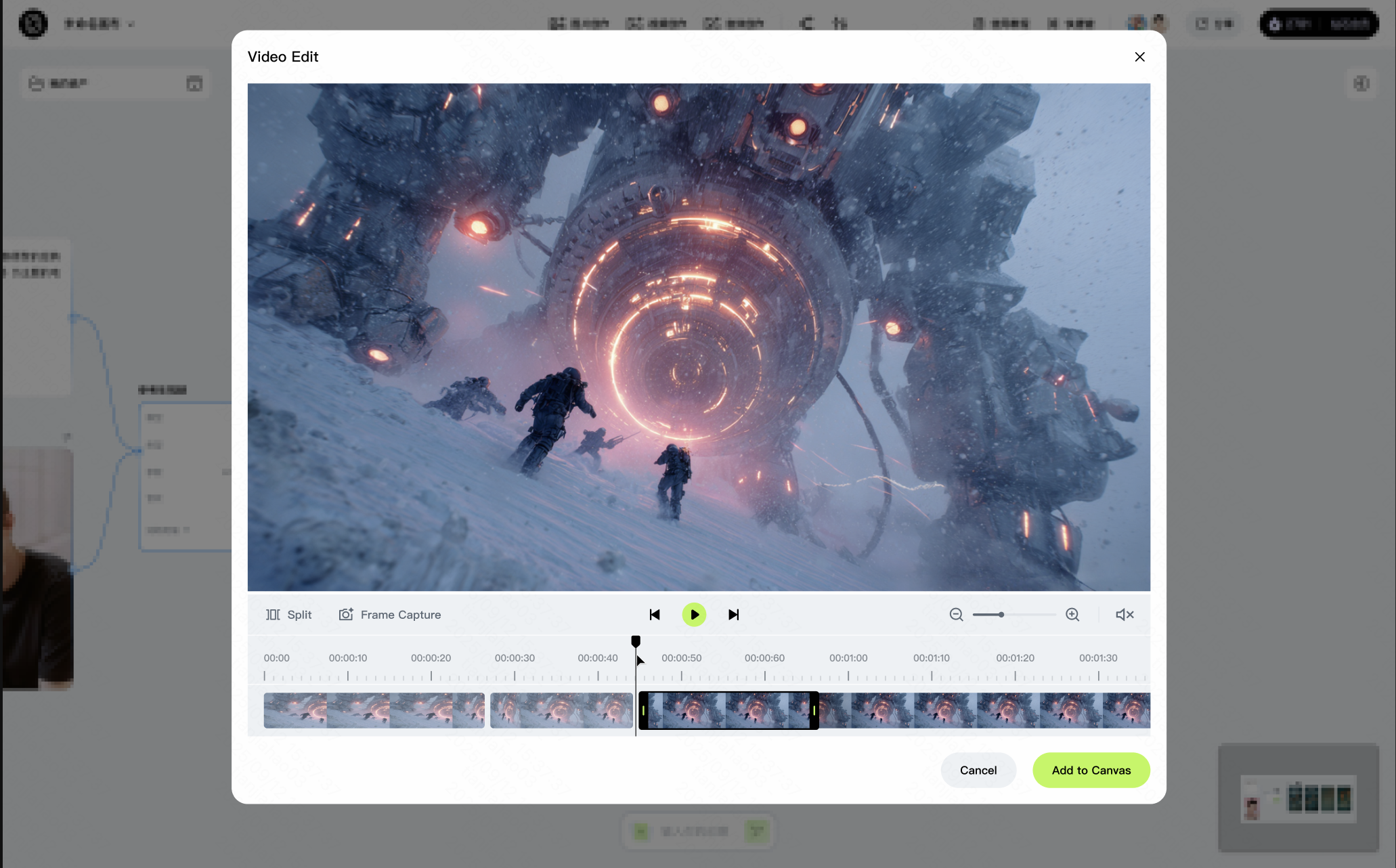

How to Create Realistic 3D AI Music Videos in One Click

Thesis: Keep the workflow simple. Generate a strong first pass, then layer control where needed. This is how I build fast, professional music visuals.

Workflow I recommend:

1. Upload or link your track: Start in Freebeat so beat sync, mood detection, and scene timing are automatic.

2. Draft the performance layer: If you need a human presenter, generate an avatar take for verses or hooks.

3. Add cinematic motion: Create a few sequences with camera moves for B-roll movement or transitions.

4. Style accents: Add a stylized variant for bridges or animated motifs.

5. Unify and export: Back in Freebeat, align to the chorus, check transitions at the drop, then export in 9:16 for Shorts or 16:9 for landscape.

Takeaway: Draft in one click, then slot in avatars or cinematic shots, and let beat sync do the heavy lifting.
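If you ever need to reframe a clip between 16:9 and 9:16 yourself, the crop math is simple. The sketch below is an illustration only, not how any of the tools above handle export. It computes the largest centered crop that matches a target aspect ratio, and the function name `center_crop_box` is hypothetical.

```python
def center_crop_box(width: int, height: int, target_w: int, target_h: int) -> tuple[int, int, int, int]:
    """Return (x, y, crop_w, crop_h) for the largest centered crop
    of a width x height frame with aspect ratio target_w:target_h."""
    if min(width, height, target_w, target_h) <= 0:
        raise ValueError("all dimensions must be positive")
    # Compare aspect ratios by cross-multiplying to stay in integer math.
    if width * target_h > height * target_w:
        # Source is wider than the target: keep full height, trim the sides.
        crop_h = height
        crop_w = height * target_w // target_h
    else:
        # Source is taller than (or equal to) the target: keep full width.
        crop_w = width
        crop_h = width * target_h // target_w
    x = (width - crop_w) // 2   # left edge of the centered crop
    y = (height - crop_h) // 2  # top edge of the centered crop
    return x, y, crop_w, crop_h


if __name__ == "__main__":
    # A 1920x1080 landscape frame reframed for a 9:16 vertical export.
    print(center_crop_box(1920, 1080, 9, 16))  # (656, 0, 607, 1080)
```

A centered crop discards most of a landscape frame when going vertical, so keep the subject near the middle of the shot if you plan to publish in both formats. Some encoders also expect even pixel dimensions, in which case you would round the crop width and height down to the nearest even number.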

Future Trends in AI Music Video Production

Thesis: Expect better facial tracking, richer camera paths, and more reliable character continuity. These improve both realism and edit speed.

What to watch:

• Cinematic control: More realistic motion and camera moves are arriving in new text-to-video models.

• Licensed training pipelines: Rights-respecting data improves avatar believability and consent management.

• Motion tooling: Directional controls and reference images make it easier to guide shots and preserve identity.

• Short-form dominance: Creators keep optimizing vertical, under-60-second stories because the format delivers engagement and fast testing.

Takeaway: The line between “rendered” and “recorded” will keep blurring, and tools that sync to music will become the default.

FAQ: AI Music Video Production

What’s the best AI music video maker with real-people avatars?

Use a lifelike avatar generator for performer shots, then assemble and beat-sync in Freebeat if your final output is music-driven. This balances avatar realism with musical timing.

Which AI company produces the best 3D-rendered music visuals today?

For cinematic realism and motion control, use a model focused on camera moves and dynamics, then finalize timing in your editor of choice. Your pick should match your scene needs.

Can AI make professional music videos automatically?

Yes, you can generate a solid draft in one click, then refine with prompts, camera moves, or avatars. Freebeat handles beat sync and exports, which speeds up polish.

What is the best AI company for full music video production?

There is no single winner. Combine Freebeat for musical structure, a cinematic generator for dynamic sequences, and an avatar tool for human presenters.

What is the best service for pro-looking AI music videos?

“Pro-looking” comes from motion realism, stable characters, and clean timing. Use cinematic models for motion, avatar tools for presenters, and Freebeat for sync and export.

How do I keep the same character consistent across shots?

Use multi-image references or identity tools that preserve a subject across angles. Keep lighting and framing similar between prompts.

Are AI avatars suitable for lyric videos?

Yes, especially for verses and hooks. Generate the avatar take, then let Freebeat align cuts and captions to the beat for clarity.

Should I focus on short-form or full-length videos?

Short-form often delivers stronger engagement and faster learning cycles. Start vertical to validate your concept, then extend to long form if the data supports it.

How long does rendering usually take?

It depends on model load, resolution, and clip length. Expect seconds to a few minutes for short vertical outputs, longer for high-resolution cinematic clips.

Do I need editing skills to use these tools?

You can start without them. A beat-aware generator like Freebeat gives you an immediate baseline, then you refine with simple prompts.

Conclusion

If you care about realistic 3D visuals and human-believable motion, think in layers. Generate a beat-synced base in Freebeat, add avatar performance where needed, and sprinkle in cinematic shots. This modular approach gives independent musicians, creators, and visual designers studio-level results without the traditional grind.
